Launching The iPhone 13 With An AR Treasure Hunt

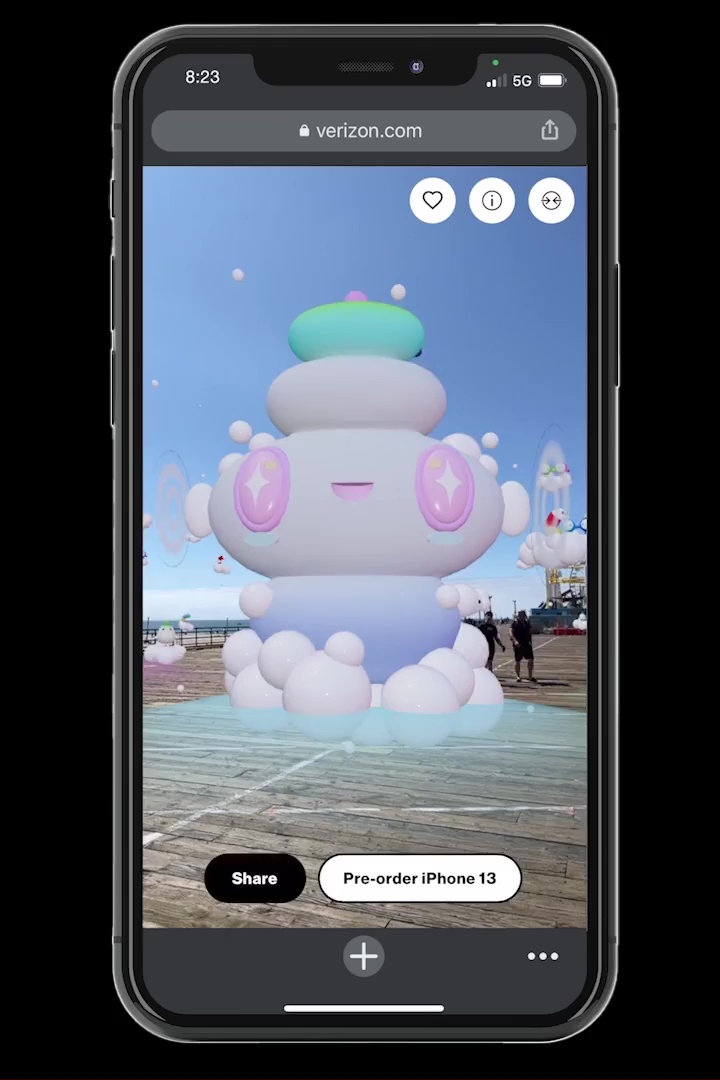

For the launch of the iPhone 13, Tool collaborated with R/GA and Verizon to create a first-of-its-kind treasure hunt hidden in the metaverse, called H1DD3N. We developed a large-scale AR installation: a magical playground in which users searched for the hidden iPhone 13. Users could also help grow this AR world by adding their own unique characters to the public installation.

The collaboration featured “Darling,” the newly released track from Grammy-nominated artist Halsey, as well as a partnership with the pop art collective FriendsWithYou, whose playful characters and world formed the backdrop of this metaverse-inspired AR experience.

The user experience centered on an AR treasure hunt: users explored the world and interacted with characters in search of The Hidden 13, for the chance to win the new iPhone 13.

To reach users everywhere and let the experience extend across realities, we created two versions: an At-Home experience available anywhere, and a geofenced experience that worked only at installations in 5 public locations around the country.

The At-Home Experience

The campaign was widely promoted on Twitter and dominated share of voice online through a well-executed media, press, and social strategy. When users arrived at the WebAR experience, they were immediately shown an animated video introducing the characters created for the campaign and the premise of the At-Home experience: customize your Friendsie, add it to the public art installation, and share it on Twitter for a chance to win.

A gamified character customizer was presented to the user in AR, allowing them to see and interact with the characters in their actual environment. Intuitive mobile UX allowed users to swipe to select from our 6 hero characters, and to tap to change up their looks. Once the user customized a Friendsie to their liking, they were prompted to ‘Add your Friendsie’ to the AR art installation.

The user was then transported, along with their Friendsie, from their living room into a mobile VR experience, watching their Friendsie fly across realities and land in our metaverse. From there, the user could look around and explore the H1DD3N world of Bryant Park, populated by a vast array of other FriendsWithYou characters, while listening to Halsey’s new song “Darling.”

The user concluded the experience by sharing their FriendsWithYou character art on Twitter for a chance to win an iPhone 13 Pro.

“We were really building the world’s first work of art across realities. Something that challenges the boundaries and gives people an experience they can do right from their phone, no matter where they are.”

-FriendsWithYou

"When artists across numerous genres come together, the synergy can be so inspiring. As soon as I saw the first renderings of the characters FriendsWithYou was creating, I knew 'Darling' would be the perfect song to exist in that world with them."

-Halsey

The On-Location Experience

In 5 cities, at busy locations with 5G coverage around the US, we installed 8-foot monoliths that gave users access to the AR treasure hunt:

- New York - Bryant Park

- Los Angeles - Santa Monica Pier

- Seattle - South Lake Union

- Miami - Pérez Art Museum

- Chicago - Maggie Daley Park

As people discovered these mysterious monoliths, they followed the instruction written on them: “Scan the QR code for a chance to win.” Because WebAR runs in the browser, no app was required, and they immediately found themselves in an immersive AR world.

We created an entire world complete with a cloud palace, 5 cloud islands with hero characters, hundreds of Friendsies added by users at home, and 6 hidden opportunities to win an iPhone 13. The entire AR installation was approximately 75 feet in diameter and 30 feet tall, which grabbed users’ imaginations and enticed them to walk around and explore the entire installation as they looked for the hidden elements.

In this scavenger hunt, users could freely explore the H1DD3N environment by walking around the site and interacting with various FriendsWithYou characters, each of which represented a different feature of the iPhone 13. Any of these larger-than-life Friendsies could reveal The Hidden 13 when a user interacted with it.

Revealing the iPhone 13

Hi, I’m Halsey! Tap me for a performance.

Experience Halsey like never before

With the new A15 Bionic chip - the world’s fastest smartphone chip - iPhone 13 Pro delivers more immersive experiences.

Howdy! Tap me to see where updated performance can take you.

Never miss a moment

With huge leaps in battery life on iPhone 13 Pro, you can stay powered up and untethered for longer.

Burp!

I mean, hello! Tap me to check out my bigger, better capacity.

AR, VR and beyond

I can handle superfast downloads and high-quality streaming thanks to superfast 5G on the iPhone 13 Pro.

We’re Beep & Boop!

Tap us to see how you can stay connected.

Connect with everyone

With superfast 5G on iPhone 13 Pro, we can handle superfast multiplayer gaming and stay linked, even in massive crowds.

Creative Production

Tool collaborated closely with FriendsWithYou to take the illustrations of their characters and the world they envisioned and bring them to life in a rich, animated 3D environment. We developed a WebAR asset pipeline that minimized implementation time, made iteration easy, and kept performance high in mobile browsers.

We worked with the renowned studio Plan8 to create an imaginative, reactive sound experience that brought the designed world further to life and fit elegantly with the hero soundscape of Halsey’s “Darling.”

WebAR is an emerging medium that presents a huge opportunity for advertisers: frictionless experiences that open directly in the browser from a link in a Tweet or a QR code on an installation.

By using WebAR for this experience, we pushed the browser and mobile devices to their technical limits. We implemented and refined best practices for world tracking and for anchoring elements to real-world positions, and we refined the UX so users could recenter the AR world when needed.
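Recentering the AR world can be illustrated with a minimal sketch. It assumes a "recenter" action places the world's root a fixed distance in front of the user's current heading, on the ground plane. The types and `recenterWorld` are hypothetical illustrations, not 8th Wall or Three.js APIs, and this is not the production code.

```typescript
// Hypothetical types for illustration; these are not 8th Wall or Three.js APIs.
interface Vec3 {
  x: number;
  y: number;
  z: number;
}

interface CameraPose {
  position: Vec3;
  yaw: number; // heading about the vertical (Y) axis, in radians
}

interface WorldRootPlacement {
  position: Vec3;
  yaw: number;
}

// Place the AR world's root `distance` metres in front of the camera's
// current heading, on the ground plane, rotated to the camera's heading.
function recenterWorld(
  camera: CameraPose,
  distance: number,
  groundY = 0,
): WorldRootPlacement {
  // With Three.js conventions the camera looks down -Z at yaw = 0,
  // so rotating that direction by `yaw` about Y gives (-sin, 0, -cos).
  const forwardX = -Math.sin(camera.yaw);
  const forwardZ = -Math.cos(camera.yaw);
  return {
    position: {
      x: camera.position.x + forwardX * distance,
      y: groundY, // ignore camera height so the world stays on the floor
      z: camera.position.z + forwardZ * distance,
    },
    yaw: camera.yaw,
  };
}
```

In a Three.js scene, the result could be copied onto the world's root object (`position.set(...)`, `rotation.y = yaw`) when the user taps a recenter button.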

Tech Approach

We used geofencing to enable the On-Location AR experience only for users who were physically present at one of the 5 locations. Users outside these geofenced areas were redirected to the At-Home experience.
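The geofence decision can be sketched as a distance check against each venue. This sketch assumes circular geofences and uses the haversine great-circle distance. The `Venue` shape, the approximate Bryant Park coordinates, and the 200 m radius are illustrative assumptions, not the campaign's real configuration.

```typescript
// Hypothetical venue record; the coordinates below are approximate and the
// radius is an assumed value, not the campaign's real geofence.
interface Venue {
  id: string;
  lat: number; // degrees
  lon: number; // degrees
  radiusMeters: number;
}

type Experience = "on-location" | "at-home";

const EARTH_RADIUS_METERS = 6371000;

// Great-circle distance between two lat/lon points (haversine formula).
function haversineMeters(lat1: number, lon1: number, lat2: number, lon2: number): number {
  const toRad = (deg: number): number => (deg * Math.PI) / 180;
  const dLat = toRad(lat2 - lat1);
  const dLon = toRad(lon2 - lon1);
  const a =
    Math.sin(dLat / 2) ** 2 +
    Math.cos(toRad(lat1)) * Math.cos(toRad(lat2)) * Math.sin(dLon / 2) ** 2;
  return 2 * EARTH_RADIUS_METERS * Math.asin(Math.sqrt(a));
}

// Return the venue whose geofence contains the point, or null if none does.
function findVenue(lat: number, lon: number, venues: Venue[]): Venue | null {
  for (const venue of venues) {
    if (haversineMeters(lat, lon, venue.lat, venue.lon) <= venue.radiusMeters) {
      return venue;
    }
  }
  return null;
}

// Users outside every geofence get the At-Home experience.
function chooseExperience(lat: number, lon: number, venues: Venue[]): Experience {
  return findVenue(lat, lon, venues) !== null ? "on-location" : "at-home";
}

const EXAMPLE_VENUES: Venue[] = [
  // Approximate centre of Bryant Park, New York; the 200 m radius is an assumption.
  { id: "bryant-park", lat: 40.7536, lon: -73.9832, radiusMeters: 200 },
];
```

In a browser, the coordinates would come from `navigator.geolocation.getCurrentPosition`. Because reported positions carry an `accuracy` value in metres, a real geofence radius would likely need some slack.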

We used 8th Wall and Three.js to develop both experiences. The main challenge for the At-Home experience was creating a seamless transition from a 6DoF WebAR environment (which tracks both position and orientation) to a 3DoF WebVR experience (which tracks orientation only) that transported the user from their home to a virtual representation of Bryant Park. To accomplish this, we used 3D objects, animations, and shaders to mask the transition from the passthrough camera feed into a VR environment in which the virtual world appeared around the user.
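The pose handling behind an AR-to-VR switch can be sketched as follows, assuming the tracker reports a 6DoF pose every frame. Once the transition starts, a 3DoF view keeps the head orientation but ignores translation, and an eased progress value could drive whatever effect masks the camera feed. The `Pose` type, the function names, and the mask uniform mentioned in the comments are hypothetical. They are not 8th Wall or Three.js APIs, and this is not the production implementation.

```typescript
type Vec3 = [number, number, number];
type Quat = [number, number, number, number]; // x, y, z, w

interface Pose {
  position: Vec3;
  orientation: Quat;
}

// 3DoF: keep the tracked head orientation, but pin the position to where the
// user stood when the AR-to-VR transition began, so walking no longer moves them.
function toThreeDof(tracked: Pose, pinnedPosition: Vec3): Pose {
  return {
    position: [pinnedPosition[0], pinnedPosition[1], pinnedPosition[2]],
    orientation: [
      tracked.orientation[0],
      tracked.orientation[1],
      tracked.orientation[2],
      tracked.orientation[3],
    ],
  };
}

// Smoothstep-eased progress in [0, 1]; it could drive a (hypothetical) mask
// shader uniform that gradually hides the passthrough camera feed.
function transitionProgress(elapsedMs: number, durationMs: number): number {
  const t = Math.min(Math.max(elapsedMs / durationMs, 0), 1);
  return t * t * (3 - 2 * t);
}

// Pose for the current frame: full 6DoF before the transition, 3DoF after it starts.
function framePose(tracked: Pose, transitionStarted: boolean, pinnedPosition: Vec3): Pose {
  return transitionStarted ? toThreeDof(tracked, pinnedPosition) : tracked;
}
```

Pinning the position rather than zeroing it keeps the virtual world from jumping at the moment the user's translation stops being applied.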

The main challenge for the On-Location experience was the perception of scale. In this setting, the AR technology could not render depth of field or accurate ground shadows, so distant objects simply appeared tiny rather than far away.

To solve this, we started users close to the largest AR element when the experience launched. This starting point acted as a visual reference, and we gave intentional motion to distant elements in the sky: as the AR elements passed by and occluded each other, the resulting parallax conveyed a sense of distance.
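The parallax cue comes down to a simple relationship. An element moving at linear speed v across the line of sight at distance d sweeps about v/d radians per second through the viewer's field of view, so at equal speed, farther elements drift more slowly. The sketch below assumes, hypothetically, that sky elements circle the installation's centre at a fixed height and that the viewer stands at that centre. It is a simplified illustration, not the production animation.

```typescript
interface Vec3 {
  x: number;
  y: number;
  z: number;
}

// Hypothetical drift pattern: a sky element circling the installation's centre.
function orbitPosition(radius: number, height: number, linearSpeed: number, tSeconds: number): Vec3 {
  const angle = (linearSpeed / radius) * tSeconds; // angular speed = v / r
  return { x: radius * Math.cos(angle), y: height, z: radius * Math.sin(angle) };
}

// Horizontal bearing (radians) of point p as seen from the viewer.
function bearingFrom(viewer: Vec3, p: Vec3): number {
  return Math.atan2(p.z - viewer.z, p.x - viewer.x);
}

// Angle the element sweeps across the viewer's field of view between t0 and t0 + dt.
function sweptAngle(
  viewer: Vec3,
  radius: number,
  height: number,
  linearSpeed: number,
  t0: number,
  dt: number,
): number {
  let delta =
    bearingFrom(viewer, orbitPosition(radius, height, linearSpeed, t0 + dt)) -
    bearingFrom(viewer, orbitPosition(radius, height, linearSpeed, t0));
  // Wrap into (-PI, PI] so crossing the atan2 seam does not inflate the result.
  while (delta > Math.PI) delta -= 2 * Math.PI;
  while (delta <= -Math.PI) delta += 2 * Math.PI;
  return Math.abs(delta);
}
```

Two elements moving at 2 m/s, one 10 m away and one 40 m away, sweep about 0.2 and 0.05 radians per second respectively. That difference in apparent speed is what reads as depth.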

Lastly, we had to overcome another important challenge: the deadline. To realize our ambitions for the number of elements, animations, and interactions, we needed a clear workflow that let the 3D artist and the developers work in parallel without blocking each other. The core idea was to define an object structure for the 3D artist to follow, and to stick to it as closely as possible so that swapping assets would not cost us much time.

Each asset went through 3 stages: blocked, animated, and cleaned. The first stage was the only one that blocked the developers, but it was also the most important for unlocking the rest of the workflow. In this stage we set up the naming convention, the group and mesh hierarchy, the animation track names, and the null objects needed to code certain interactions. Once the first stage was complete, the dev team could implement the asset and keep coding interactions. Whenever the animation or cleaning stage produced an update, they simply dropped in the replacement 3D asset without redoing any code.

We chose glTF as the format for our 3D assets. It is optimized for delivery and supports compression, which let us use more detailed, higher-polycount objects. It also provides a structured file that the development team could easily tweak in code, so the 3D artist could keep working on more urgent matters.
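One way to protect the "drop in and replace" step is a quick check that each new glTF file still honours the structure agreed in the blocked stage. The sketch below reads the node and animation names from the parsed glTF 2.0 JSON (`nodes`, `children`, `animations` are real fields of the format). The `AssetContract` shape, `validateAsset`, and example names such as `NULL_TapTarget` are hypothetical, not a description of the team's actual tooling.

```typescript
// Minimal subset of the glTF 2.0 JSON structure used by this check.
interface GltfNode {
  name?: string;
  children?: number[]; // indices into `nodes`
  mesh?: number;
}

interface GltfAnimation {
  name?: string;
}

interface GltfJson {
  nodes?: GltfNode[];
  animations?: GltfAnimation[];
}

// Hypothetical contract agreed during the "blocked" stage for one asset.
interface AssetContract {
  requiredNodes: string[]; // groups, meshes and null objects looked up by name in code
  requiredAnimations: string[]; // animation track names played by name in code
  hierarchy: Record<string, string[]>; // parent node name -> expected child node names
}

// Return contract violations; an empty list means the updated file should be
// safe to drop in without touching the interaction code.
function validateAsset(gltf: GltfJson, contract: AssetContract): string[] {
  const nodes = gltf.nodes ?? [];
  const byName = new Map<string, GltfNode>();
  for (const node of nodes) {
    if (node.name !== undefined) byName.set(node.name, node);
  }
  const animationNames = new Set(
    (gltf.animations ?? []).map((a) => a.name).filter((n): n is string => n !== undefined),
  );

  const problems: string[] = [];
  for (const required of contract.requiredNodes) {
    if (!byName.has(required)) problems.push(`missing node "${required}"`);
  }
  for (const required of contract.requiredAnimations) {
    if (!animationNames.has(required)) problems.push(`missing animation "${required}"`);
  }
  for (const [parentName, expectedChildren] of Object.entries(contract.hierarchy)) {
    const parentNode = byName.get(parentName);
    if (parentNode === undefined) {
      problems.push(`cannot check children of missing node "${parentName}"`);
      continue;
    }
    const childNames = new Set(
      (parentNode.children ?? [])
        .map((i) => nodes[i]?.name)
        .filter((n): n is string => n !== undefined),
    );
    for (const child of expectedChildren) {
      if (!childNames.has(child)) problems.push(`"${child}" is not a child of "${parentName}"`);
    }
  }
  return problems;
}
```

Running such a check whenever an animated or cleaned version arrives would surface renamed tracks or moved nulls before they break interaction code.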

The On-Location experience had a larger number of assets, which required a few tricks to optimize the experience and maintain a good frame rate. One of these tricks was using null points and instanced meshes for many of our cloud-shaped elements. We exported the glTF with null points that carried position, scale, and rotation but no 3D geometry, then used an instanced mesh to place a high-poly sphere at each null point. This technique not only reduced the number of draw calls but also provided better visual quality.
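The null-point trick can be sketched as turning each geometry-free glTF node into one 4x4 instance matrix. This assumes the null points are identified by a name prefix (here the hypothetical `CLOUD_`) and sit directly under an untransformed root, so only their local translation, rotation, and scale matter. The helper names are illustrative. The matrix layout follows the column-major convention of Three.js `Matrix4.elements`.

```typescript
// Minimal subset of a glTF 2.0 node. Nodes that use a `matrix` instead of
// translation/rotation/scale are not handled in this sketch.
interface GltfNode {
  name?: string;
  mesh?: number;
  translation?: [number, number, number];
  rotation?: [number, number, number, number]; // unit quaternion x, y, z, w
  scale?: [number, number, number];
}

// Write the column-major matrix T * R * S into `out` starting at `offset`.
function composeTRS(
  t: [number, number, number],
  q: [number, number, number, number],
  s: [number, number, number],
  out: Float32Array,
  offset: number,
): void {
  const [x, y, z, w] = q;
  const [sx, sy, sz] = s;
  const x2 = x + x, y2 = y + y, z2 = z + z;
  const xx = x * x2, xy = x * y2, xz = x * z2;
  const yy = y * y2, yz = y * z2, zz = z * z2;
  const wx = w * x2, wy = w * y2, wz = w * z2;
  out.set(
    [
      (1 - (yy + zz)) * sx, (xy + wz) * sx, (xz - wy) * sx, 0,
      (xy - wz) * sy, (1 - (xx + zz)) * sy, (yz + wx) * sy, 0,
      (xz + wy) * sz, (yz - wx) * sz, (1 - (xx + yy)) * sz, 0,
      t[0], t[1], t[2], 1,
    ],
    offset,
  );
}

// Collect geometry-free "null point" nodes whose names start with `prefix`
// and pack one instance matrix per point into a single buffer.
function instanceMatricesFromNullPoints(nodes: GltfNode[], prefix: string): Float32Array {
  const points = nodes.filter(
    (n) => n.mesh === undefined && (n.name ?? "").startsWith(prefix),
  );
  const out = new Float32Array(points.length * 16);
  points.forEach((n, i) => {
    composeTRS(n.translation ?? [0, 0, 0], n.rotation ?? [0, 0, 0, 1], n.scale ?? [1, 1, 1], out, i * 16);
  });
  return out;
}
```

In Three.js, each 16-element slice could be loaded with `Matrix4.fromArray(array, offset)` and passed to `InstancedMesh.setMatrixAt(i, matrix)`, then flagged with `instanceMatrix.needsUpdate = true`. The whole cloud field then renders as a single draw call for the shared sphere geometry.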

We used the same technique for the custom characters floating in the distance, but it needed some adjustment: the characters were made of multiple geometries, and an instanced mesh accepts only one geometry. We solved this by creating one instanced mesh for each child geometry in the glTF file (our object convention proved helpful here too) and adding them all to a group that served as the main parent for subsequent transformations (scale, rotation, and position).
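The per-child-geometry batching can be sketched as follows. The sketch assumes each character glTF exposes its child meshes with local transforms, and that each character placement is a column-major 4x4 matrix. `CharacterPart`, `buildPartBatches`, and the matrix helpers are hypothetical. Each resulting batch corresponds to one instanced mesh (one geometry, many instances) whose instance matrices are placement × part-local.

```typescript
type Mat4 = number[]; // 16 numbers, column-major

// Column-major 4x4 multiply: returns a * b.
function multiply(a: Mat4, b: Mat4): Mat4 {
  const out = new Array<number>(16).fill(0);
  for (let col = 0; col < 4; col++) {
    for (let row = 0; row < 4; row++) {
      let sum = 0;
      for (let k = 0; k < 4; k++) sum += a[k * 4 + row] * b[col * 4 + k];
      out[col * 4 + row] = sum;
    }
  }
  return out;
}

function translation(x: number, y: number, z: number): Mat4 {
  return [1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 1, 0, x, y, z, 1];
}

// One child mesh of the character glTF, with its transform relative to the character root.
interface CharacterPart {
  name: string;
  local: Mat4;
}

// One batch per child geometry: every character contributes one instance to it.
interface PartBatch {
  part: string;
  matrices: Float32Array; // 16 floats per instance
}

function buildPartBatches(parts: CharacterPart[], placements: Mat4[]): PartBatch[] {
  return parts.map((part) => {
    const matrices = new Float32Array(placements.length * 16);
    placements.forEach((placement, i) => {
      // Instance transform = where the character is * where this part sits on it.
      matrices.set(multiply(placement, part.local), i * 16);
    });
    return { part: part.name, matrices };
  });
}
```

With P parts and N characters, this yields P instanced meshes instead of P × N separate meshes, assuming one material per part. In Three.js those instanced meshes would then be added to a shared `Group`, so scaling, rotating, or moving the group transforms every character at once.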