Why

To promote the release of Ingress Prime, Niantic Labs wanted to imagine the future of Artificial Intelligence in a campaign to recruit new players to the game.

So we created ADA vs. JARVIS, a multi-platform story that blurs the line between the exciting fiction and sobering reality of what is possible in AI.

How

We created the story of ADA and JARVIS, 2 AIs brought to life by their factions in an attempt to gain an advantage in the ongoing global war to control XM.

The AIs started life trained on only a small amount of data and would need to learn much more to be able to speak to the people and create propaganda to inspire them to join the cause.

So they began conversations with the community to learn, and over time, they evolved their ability for language. They generated and posted hundreds of propaganda visuals on social media and, through feedback from the community, they explored and evolved their creative sense.

The overall experience reached millions of people around the world. Hundreds of thousands of them contributed to the training and participated in the experience, and 2 AIs grew up before our eyes.

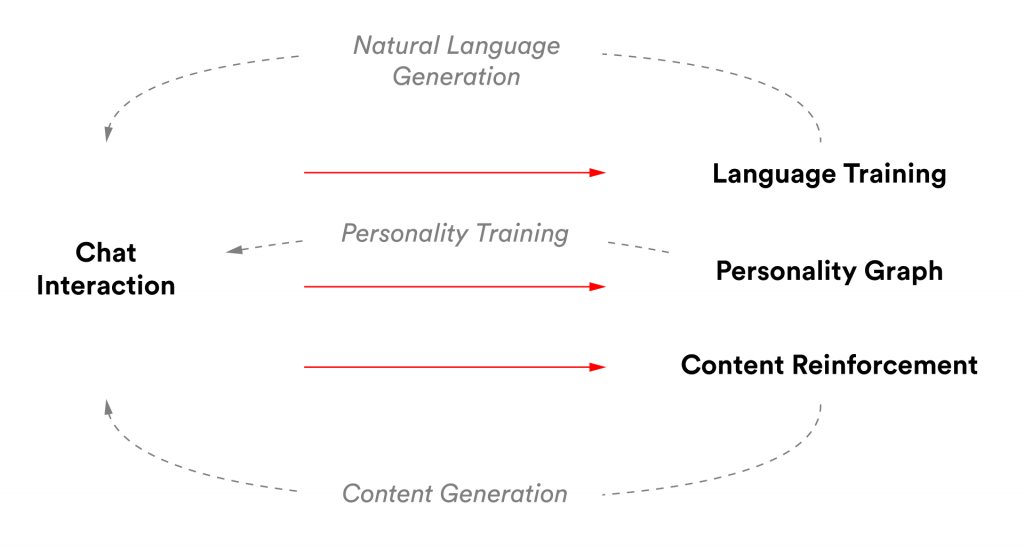

To bring this story to life, we designed and built an entire ecosystem consisting of natural language generation, chat interactions, a personality graph, parameterized video generation, a user reinforcement system and more, weaving the entire story together. We then built a full-featured admin tool for the client to schedule, script, moderate, publish and test each piece of the campaign to help guide the story from start to finish.

Ingress

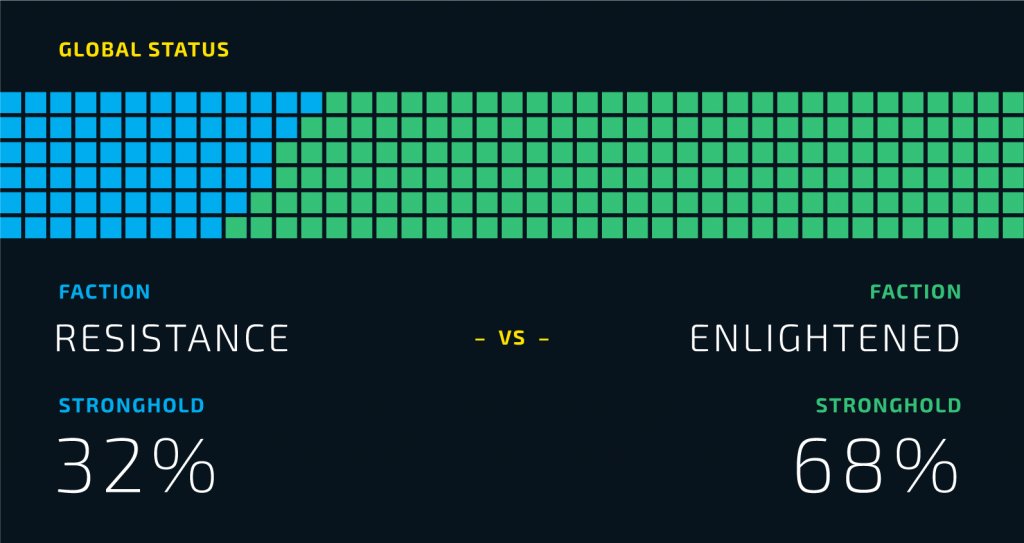

Ingress is a mobile AR game launched by Niantic Labs (the makers of Pokémon GO) in late 2013 that immerses its players in an alternate reality where 2 global factions are at odds in the fight to control the fate of humanity. Players converge with their mobile devices around real-world AR locations scattered across the entire globe, in an effort to take control of Exotic Matter and, by extension, the human race.

Chat Interactions: The system was constructed to continually learn by harvesting text from chat interactions, causing our AIs’ voices to evolve and, over time, more and more closely resemble the speaking style of their faction. These training sessions gave users a way to directly interact with and influence the growth of the AI and, by extension, help the cause of their faction.

Natural Language Generation: Natural Language Generation (or NLG) is a subfield in AI that focuses on imitating human writing using a neural network trained on a large corpus of curated text. We used NLG to give each AI a voice, and with their voice they created headlines for propaganda visuals and filled out the conversation during their chat interactions.

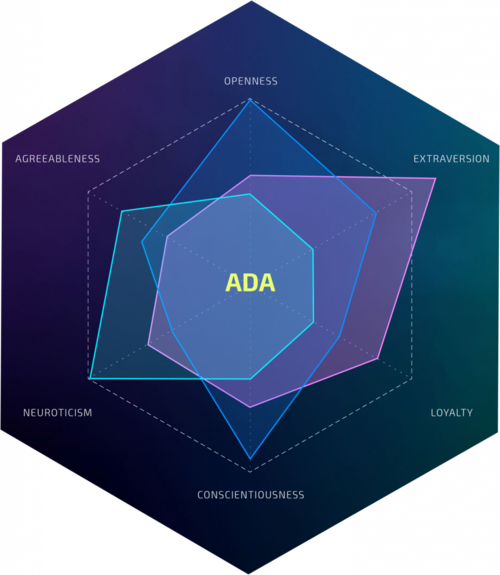

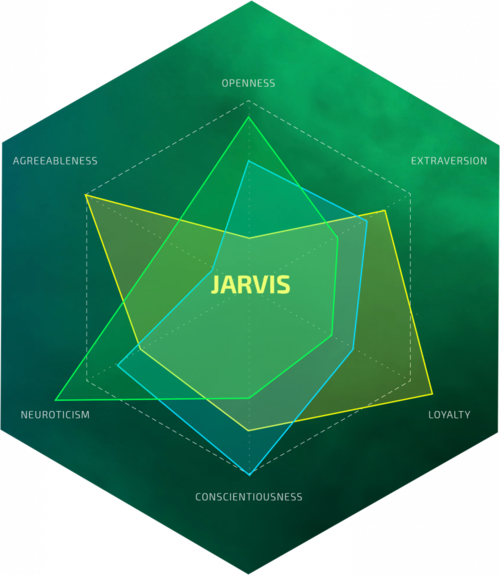

Personality Graphs

Each AI has a “personality” and that personality should influence the way it makes choices and expresses itself.

Although it is possible to infer personality from language, it is generally inefficient, so we decided to create a separate and simplified representation of the AI personalities in a graph of traits.

This “personality graph” was incrementally trained in real time using a simple gradient-descent-style function, with the training data provided by specific multiple-choice image questions within the chat experience (e.g. which of these images best represents the faction?).

These answers were delivered to the personality graph system in real time, mid-conversation, making the personality a living, breathing, ever-changing aspect of each AI.
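The incremental training described in this section can be illustrated with a minimal sketch. It assumes the graph is a flat mapping of trait names to values and that each multiple-choice image carries its own trait values; the class name, trait names and learning rate are all hypothetical and not taken from the production system.

```python
from dataclasses import dataclass


@dataclass
class PersonalityGraph:
    """Hypothetical flat 'graph of traits': trait name -> current value."""
    traits: dict[str, float]
    learning_rate: float = 0.05  # assumed step size, not from the campaign

    def update(self, chosen_image_traits: dict[str, float]) -> None:
        """Take one gradient-descent step toward the image a user picked.

        The loss per trait is 0.5 * (current - target) ** 2, whose gradient
        with respect to the current value is (current - target).
        """
        for name, target in chosen_image_traits.items():
            current = self.traits.get(name, 0.0)
            gradient = current - target
            self.traits[name] = current - self.learning_rate * gradient


# One answer arrives mid-conversation and nudges the personality slightly.
graph = PersonalityGraph({"aggression": 0.0, "optimism": 1.0}, learning_rate=0.5)
graph.update({"aggression": 1.0, "optimism": 0.0})
```

Because each answer moves the traits only a fraction of the way toward the chosen image, no single vote dominates, and the personality drifts gradually as answers accumulate.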

Content Generation

The content generator was the engine that produced all imagery in the campaign. When one of the AIs was “creating content”, behind the scenes the content generator ingested the current personality graph of that AI, a set of fresh NLG headlines and a set of content configuration settings (to provide the client with an ability to guide the overarching story).

It then assembled and delivered a range of generated images as options, which were then curated before being sent into the wild.

This process happened multiple times per week throughout the campaign as each AI “experimented with and learned how to create the best content” resulting in a large library of generated imagery.

Being aware of this volume beforehand, we designed a system that would provide enough possible combinations of visuals and effects to not only avoid repetitiveness but also provide a creative range for the AIs to explore in creating propaganda content.

Thousands of images and background videos were curated and prepped to be in the resource pool for the AIs to pull from. Each image was tagged with its own personality graph values, so that at the time of image selection the assets with the closest graph values were selected for use in the image. This underscores the importance of precision in the personality graph: slight variations could lead to entirely different imagery being selected for generated content.
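Selecting the assets with the closest graph values can be sketched as a nearest-neighbour lookup. This is only an illustration: Euclidean distance, the asset dictionary layout and the trait names are assumptions, since the source does not say how closeness was measured.

```python
import math


def trait_distance(a: dict[str, float], b: dict[str, float]) -> float:
    """Euclidean distance between two trait mappings (missing traits count as 0)."""
    keys = set(a) | set(b)
    return math.sqrt(sum((a.get(k, 0.0) - b.get(k, 0.0)) ** 2 for k in keys))


def select_assets(personality: dict[str, float], assets: list[dict], count: int = 3) -> list[dict]:
    """Return the `count` assets whose tagged traits are closest to the personality."""
    ranked = sorted(assets, key=lambda asset: trait_distance(personality, asset["traits"]))
    return ranked[:count]


# Hypothetical resource pool, each asset tagged with its own trait values.
pool = [
    {"id": "img_a", "traits": {"order": 1.0, "chaos": 0.0}},
    {"id": "img_b", "traits": {"order": 0.0, "chaos": 1.0}},
    {"id": "img_c", "traits": {"order": 0.8, "chaos": 0.3}},
]
picked = select_assets({"order": 0.9, "chaos": 0.1}, pool, count=2)
```

With a ranking like this, a small shift in the personality values can reorder the nearest assets, which is why slight variations in the graph could change the selected imagery.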

The personality graph and the NLG headlines provided a good window into how each AI was evolving, but provided basically no controls for guiding the story of each AI. For this purpose we added some manual configuration to the content generator. Over time the client could use the content configuration to simulate the AIs experimenting with different image decisions, such as the number of assets, different animation and repeat patterns, etc.

Reinforcement System

After a propaganda image was in the wild, it was looped back into the training chat experience as a multiple-choice question. As users voted for one piece of propaganda content over another, a simple neural network was trained on the “successful” values of certain image properties. Over time, this system helped optimize the effectiveness of the propaganda content.
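As a rough sketch of learning from these votes, the example below trains a single logistic unit, the simplest possible neural network, on pairs of image property vectors. The actual network architecture and the image properties used in the campaign are not described in the source, so the class, the feature layout and the learning rate here are hypothetical.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


class PreferenceModel:
    """Single logistic unit that learns which image properties win votes."""

    def __init__(self, n_features: int, learning_rate: float = 0.1):
        self.weights = [0.0] * n_features
        self.learning_rate = learning_rate  # assumed value

    def score(self, features: list[float]) -> float:
        """Weighted sum of an image's property values."""
        return sum(w * x for w, x in zip(self.weights, features))

    def record_vote(self, winner: list[float], loser: list[float]) -> None:
        """One gradient-ascent step on the log-likelihood of the observed vote."""
        # Probability the model currently assigns to the winner beating the loser.
        p = sigmoid(self.score(winner) - self.score(loser))
        for i, (w_x, l_x) in enumerate(zip(winner, loser)):
            self.weights[i] += self.learning_rate * (1.0 - p) * (w_x - l_x)


# Hypothetical properties: [uses_glitch_effect, uses_repeat_pattern]
model = PreferenceModel(n_features=2)
for _ in range(50):
    model.record_vote(winner=[1.0, 0.0], loser=[0.0, 1.0])
```

After enough votes, properties that appear more often in winning images receive higher weights, and `score` can be used to rank candidate images before curation.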

With the combination of these approaches the generated propaganda content was often unsettling and compelling, drawing users in with unexpected and stirring combinations of words, imagery and effects.

Campaign Controller

There were many more processes and services working in concert than the 3 described above to make this ecosystem function. To properly orchestrate all of these pieces we created a campaign controller to schedule, moderate, configure, test and publish for the entire campaign. From here all significant dates in the timeline, content and copy for the website, content configuration for the content generator and chat scripts were stored and managed.

Testing tools for critical services were housed in the campaign controller, alongside the moderation and publishing systems. Even the entire collection of accepted and rejected content was housed here to enable quick fixes. With this tool, the client was able to oversee all aspects of the ecosystem and potentially even add chapters for the story to continue into the future.